Have you heard of ChatGPT? Chances are you have: the service launched in November 2022, gained 1 million users in its first week, and now has over 100 million users. Still, many people remain unaware of it. If you haven’t heard of ChatGPT, or have heard of it but are unclear on what it is or what effect it and similar AI services might have on the world, read on.

ChatGPT is an AI-powered chatbot designed to mimic a human conversationalist. Its goal is to make communicating with computers more natural. Type anything into ChatGPT, and it will respond in clearly written English. You can also ask it to write things for you, like email responses or school essays, and it can write in a wide variety of styles, producing fairy tales, poetry, and even computer code. Unlike most chatbots, ChatGPT remembers what you’ve said earlier in a conversation and takes that context into account in its replies.

One way to think of ChatGPT is as a sort of search engine like Google or Microsoft’s Bing. There are three huge differences, however. First, ChatGPT answers your queries directly, rather than presenting you with a list of websites that contain information about your query. Second, although it sounds confident, ChatGPT often gets facts wrong. Third, if you ask ChatGPT the same question twice, you might not get precisely the same answer—there’s an element of randomness in its responses.

How could this be? ChatGPT is what’s called a “large language model,” a neural network trained on extremely large quantities of text: reportedly 300 billion words from 570 GB of datasets. That means ChatGPT doesn’t “know” anything. Instead, it looks at a prompt and generates a response one word at a time, based on the probability of which word is likely to come next. In some ways, it’s the ultimate form of auto-complete. Ask ChatGPT to write a fairy tale, and it will start with “Once upon a time” because, in its training data, fairy tales very often begin with those words. That’s also the source of its mistakes: just because words often appear near one another says nothing about whether a statement combining them is true.
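
To make the auto-complete idea concrete, here is a toy sketch in Python. It is a deliberate oversimplification and not how ChatGPT is actually built: ChatGPT uses a large transformer neural network that considers a long stretch of preceding text, whereas this sketch, with a made-up three-sentence corpus and hypothetical function names (`train_bigrams`, `next_word`, `complete`), looks only at the single previous word. It does show the two behaviors described above: the next word is chosen by probabilities learned from training text, and the choice involves randomness, so the same prompt can yield different completions.

```python
from __future__ import annotations

import random
from collections import Counter, defaultdict

# A tiny, made-up "training corpus" (purely illustrative).
CORPUS = (
    "once upon a time there was a princess . "
    "once upon a time there lived a dragon . "
    "once upon a midnight dreary ."
)


def train_bigrams(text: str) -> dict[str, Counter]:
    """Count how often each word is followed by each other word."""
    words = text.split()
    counts: dict[str, Counter] = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts


def next_word(counts: dict[str, Counter], word: str, rng: random.Random) -> str | None:
    """Randomly pick a follower of `word`, weighted by how often it was seen."""
    followers = counts.get(word)
    if not followers:
        return None  # the model has never seen anything follow this word
    candidates = list(followers)
    weights = [followers[w] for w in candidates]
    return rng.choices(candidates, weights=weights, k=1)[0]


def complete(counts: dict[str, Counter], start: str, max_words: int = 8,
             seed: int | None = None) -> str:
    """Auto-complete from `start` one word at a time until a period or the length cap."""
    rng = random.Random(seed)
    out = [start]
    for _ in range(max_words):
        word = next_word(counts, out[-1], rng)
        if word is None or word == ".":
            break
        out.append(word)
    return " ".join(out)


model = train_bigrams(CORPUS)
print(model["upon"])  # Counter({'a': 3})

# Same prompt, different random seeds: the completions can differ.
for seed in range(3):
    print(complete(model, "once", seed=seed))
```

Depending on the seed, the prompt “once” might complete as “once upon a time there lived a dragon,” “once upon a midnight dreary,” or even “once upon a dragon.” Each step is statistically plausible, but nothing in the model checks whether the whole sentence makes sense, which is a miniature version of how fluent-sounding mistakes arise.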

It’s difficult to avoid anthropomorphizing ChatGPT and similar AI-driven chatbots that are flooding the market. Their answers sound utterly convincing, but again, they don’t “know” anything. Despite AI being short for “artificial intelligence,” they don’t think (whatever that might mean). They have no agenda and aren’t trying to convince or mislead.

Don’t think of an AI as a person on the other side of the screen. Instead, it’s essential to recognize its limitations, which include the following:

- AI-generated text tends to use generalities without much supporting detail, at least without further prompting. Non-experts may not notice, but experts often cringe when they read AI-generated text.

- When details are present, there’s no guarantee that they’re correct. They could be slightly off or ludicrously wrong, so you must double-check everything before assuming it’s true.

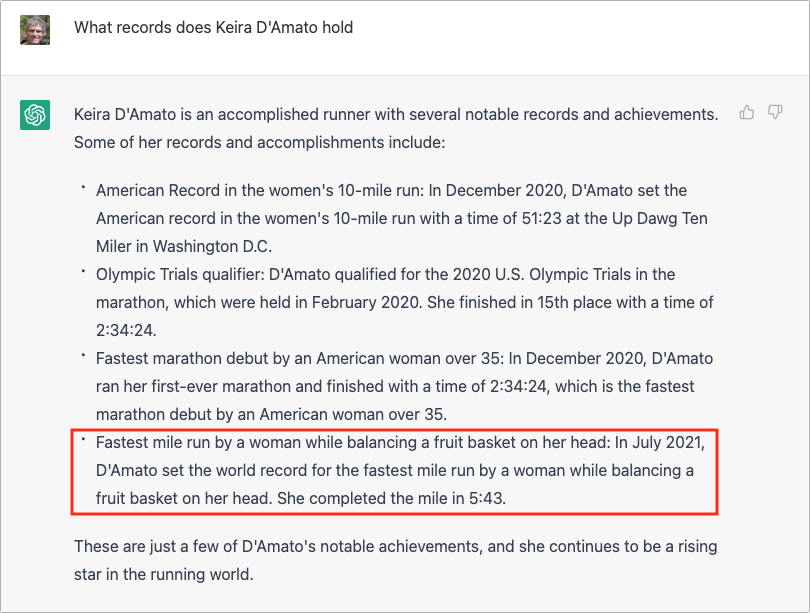

- If the desired information isn’t in the training set or is only weakly represented, conversations about it can get weird. Notably, ChatGPT’s training data ends in 2021, so it can’t converse about anything more recent than that. Plus, it can fabricate answers outright. (When asked about American marathoner Keira D’Amato, ChatGPT stated that she held the world record for the mile while balancing a fruit basket on her head, which has no basis in reality.)

- When prompts contain words that have multiple meanings, like *break*, *run*, and *set*, AI chatbots can return nonsensical results that confuse the different meanings.

- Although the programmers behind AI chatbots try to head off requests aimed at producing obviously racist, sexist, or otherwise offensive responses, the training data includes all sorts of biased and even hateful text. As a result, AI chatbots can say things that are either explicitly or implicitly problematic.

Despite these very real concerns, the AI genie is out of the bottle. The two highest-profile announcements have come from Microsoft and Google. Microsoft has invested in ChatGPT-maker OpenAI and integrated the technology behind ChatGPT into a new version of its Bing search engine (available only in the Microsoft Edge browser for now). Google, which pioneered the technology underpinning ChatGPT, has released its own AI chatbot, Bard.

Those are just the tip of the iceberg. We’ve also seen AI appearing in products that can help write code, summarize meeting notes, polish email messages, and even create unlimited text adventure games. CARROT Weather, the famously snarky iPhone weather app, has even integrated ChatGPT and tuned it to respond with attitude.

It’s early days, but many people have already found good uses for ChatGPT. For instance:

- If you’re faced with writing a difficult email, consider asking ChatGPT to draft it for you. It likely won’t be perfect, but you might get some text that you can tweak to suit your needs. In fact, for many forms of writing, ChatGPT can both give you a draft to start from and suggest improvements to what you’ve written. This is especially useful for people who struggle with writing in English.

- ChatGPT can help generate code. For inexperienced programmers, it’s a good start, and for long-time coders, it can save typing and debugging time. We tried asking it to write an AppleScript that would create a sequentially numbered calendar event every Monday. Its first attempt didn’t work, but after we told it about the errors the code generated, it arrived at a functional script.

- We know people who enjoy composing doggerel for birthday cards. If you’d like to do that but can’t come up with the words or rhymes, ask ChatGPT. For instance, try asking it to write a “roses are red” poem on a particular topic. Or ask it for a country music song, but don’t buy a ticket to Nashville.

- Need to come up with a clever name for a project or event? Ask ChatGPT for ideas that are three or four words long and include certain concepts. Keep asking it to refine its suggestions, or nudge it in new directions. It may not generate exactly what you want, but it will give you lots of ideas to combine on your own.

- If you’re editing some confusingly written text, you can ask ChatGPT to simplify its language. Again, the result may not be perfect, but it might point you in a useful direction.

What all these examples have in common is that they use ChatGPT as a tool, not as a replacement for a person. It’s at its best when it’s helping you improve what you already do. For instance, it won’t replace a programmer, but it can help get you started with simple scripts. The hard part is learning how to prompt it to produce what you want. But remember, it’s not a person, so you can keep asking and nudging until you’re happy with the results.

There are many reasons to be skeptical of how AI services are being used, and we recommend using them cautiously. But given the levels of interest from businesses and users alike, it seems that they’re here to stay.

(Featured image by iStock.com/Userba011d64_201)